P11: DesAna-ConcRespExp

Design and analysis of complex concentration-response experiments

In project P11 (Design and analysis of complex concentration-response experiments), we will develop methods for the design and analysis of concentration-response data for which common simplifying modelling assumptions do not hold. In particular, we will consider the situation in which the experimental data are affected by relatively high variability between independent experiments, e.g. due to daily effects, and by heteroscedastic errors, i.e. when the variance of the errors is not constant across observations. Moreover, we will address the problem of designing an experiment for a toxic compound for which no prior information is available.

In Schürmeyer et al. (2024, manuscript under revision), we investigated the performance of different designs, i.e. sets of concentrations, in toxicological concentration-response experiments in terms of the precision of the statistical analysis. We considered a toxic compound for which sufficient prior knowledge was available. Based on this prior knowledge, a new concentration-response experiment was designed using different design strategies. The corresponding experiment was then conducted, resulting in a large data set of observations on the effect at 50 different concentrations. We used this data set to analyse the performance of the different strategies, in particular the commonly used log-equidistant design strategy and a statistically motivated design strategy. Under the assumption that the data can be described by a four-parameter log-logistic model (4pLL model) with homoscedastic errors, we investigated the distances between the model fits based on the complete data set and the model fits based on randomly drawn subsets corresponding to the considered design strategies. We found that the statistically motivated design strategy outperforms the commonly used log-equidistant strategy. However, we also observed that the homoscedastic 4pLL model did not perfectly describe the relationship between the concentration and the response, as the data exhibit both heteroscedastic errors and daily effects.
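To make the two ingredients named above concrete, the following minimal sketch shows one common parameterisation of the 4pLL curve and a log-equidistant design. The function names, the parameter names (`slope`, `lower`, `upper`, `ec50`) and all numerical values are illustrative assumptions, not the settings used in Schürmeyer et al. (2024).

```python
import numpy as np

def four_pll(conc, slope, lower, upper, ec50):
    """4pLL curve: for slope > 0 the response falls from `upper` to `lower`
    as the concentration increases; `ec50` is the half-effect concentration."""
    conc = np.asarray(conc, dtype=float)
    return lower + (upper - lower) / (1.0 + (conc / ec50) ** slope)

def log_equidistant_design(c_min, c_max, n_conc):
    """Concentrations that are equally spaced on the log scale."""
    return np.geomspace(c_min, c_max, n_conc)

# Hypothetical example: five concentrations between 0.01 and 100
design = log_equidistant_design(0.01, 100.0, 5)
response = four_pll(design, slope=1.5, lower=0.0, upper=100.0, ec50=1.0)
```

In this sketch the middle concentration coincides with the assumed `ec50`, so the curve takes the value halfway between `lower` and `upper` there.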

Therefore, we will first investigate modelling approaches that account for heteroscedastic errors. Initially, we will model the variances of the data by known functions of the concentration. Then, we will also include variance functions that depend on additional unknown parameters that have to be estimated. We will derive optimal designs for the developed models both by using established design criteria such as D-optimality and by deriving new criteria that address the precise estimation of certain alert concentrations. In addition, we will develop optimality criteria for checking whether the assumption of homoscedastic errors is appropriate (Lanteri et al., 2023).
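As a rough illustration of how a known variance function enters the D-criterion, the sketch below evaluates the log-determinant of a weighted information matrix for a 4pLL curve. It is a sketch under stated assumptions and not the project's method: the parameter values, the design, and the variance function `1 + 0.5 * c` are hypothetical, and the gradient is approximated numerically by central differences.

```python
import numpy as np

def four_pll(conc, theta):
    """4pLL curve with theta = (slope, lower, upper, ec50)."""
    slope, lower, upper, ec50 = theta
    return lower + (upper - lower) / (1.0 + (conc / ec50) ** slope)

def gradient(conc, theta, rel_step=1e-6):
    """Central-difference gradient of the curve w.r.t. theta at one concentration."""
    theta = np.asarray(theta, dtype=float)
    grad = np.empty(theta.size)
    for k in range(theta.size):
        h = rel_step * max(abs(theta[k]), 1.0)
        up, down = theta.copy(), theta.copy()
        up[k] += h
        down[k] -= h
        grad[k] = (four_pll(conc, up) - four_pll(conc, down)) / (2.0 * h)
    return grad

def log_det_information(concs, weights, theta, variance_fn):
    """log det of M = sum_i w_i * g(x_i) g(x_i)^T / sigma^2(x_i)."""
    info = np.zeros((len(theta), len(theta)))
    for c, w in zip(concs, weights):
        g = gradient(c, theta)
        info += w * np.outer(g, g) / variance_fn(c)
    sign, logdet = np.linalg.slogdet(info)
    return logdet if sign > 0 else -np.inf

theta0 = (1.5, 0.0, 100.0, 1.0)           # hypothetical prior guess
concs = np.geomspace(0.01, 100.0, 5)      # log-equidistant support points
weights = np.full(5, 0.2)                 # equal design weights

logdet_homo = log_det_information(concs, weights, theta0, lambda c: 1.0)
# Hypothetical known variance function that grows with the concentration
logdet_hetero = log_det_information(concs, weights, theta0, lambda c: 1.0 + 0.5 * c)
```

A D-optimal design would maximise this quantity over the support points and weights. The sketch only evaluates it for one fixed design, which is enough to compare candidate designs under a given variance function.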

In a further step, we will address the challenge of daily effects within the experiments. As the day on which the experiment was conducted seems to affect the data, the assumption that data resulting from experiments on different days can be described without considering the daily effect is questionable. We will design experiments for testing the occurrence of daily effects. For cases in which the inclusion of the daily effects is necessary, we will develop appropriate models. One approach will assume that the concentration-response relationships on different days are similar (but not equal), such that the resulting concentration-response curves might share some parameters (but not all). Other approaches will be based on mixed-effects models.
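One possible way to write down the two modelling ideas above is sketched here. The notation and the choice of which parameters are shared across days are assumptions made for illustration only; the project may use a different structure. Here $y_{ijk}$ denotes the $k$-th response at concentration $x_j$ on day $i$.

```latex
% 4pLL curve with slope b, lower asymptote c, upper asymptote d, half-effect concentration e
f(x, \theta) = c + \frac{d - c}{1 + (x / e)^{b}}, \qquad \theta = (b, c, d, e)

% (a) Partially shared parameters (assumed here: b and e shared, asymptotes day-specific)
y_{ijk} = f(x_j, \theta_i) + \varepsilon_{ijk}, \qquad \theta_i = (b, c_i, d_i, e)

% (b) Mixed-effects formulation with a random day effect u_i
y_{ijk} = f(x_j, \theta + u_i) + \varepsilon_{ijk}, \qquad u_i \sim \mathcal{N}(0, \Sigma)
```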

In Schürmeyer et al. (2024, manuscript under revision), the statistically motivated design strategy was based on specific assumptions about the shape of the concentration-response relationship. As soon as prior information about the toxic compound is available, this strategy is applicable. However, if a new toxic compound is to be analysed and no prior information is available, the proposed approach fails. We will thus develop design strategies that can be used without any prior information about the considered compound. In particular, we will derive optimal designs for non-parametric modelling approaches such as GAMs and GAMLSS, which are flexible enough to fit different shapes. Moreover, we will develop optimal designs that are especially suited to model identification. This will also be based on existing approaches motivated by model selection or model averaging, see Alhorn et al. (2021). All designs will be developed for both non-sequential and sequential experiments.

In all considered scenarios, sample size calculation (for each sequence) is of crucial importance. More precisely, the number of replicates at the different concentrations has to be fixed under certain budget constraints for the complete experiment. We will consider different approaches to address this problem. First, sample size calculation based on confidence intervals for specific alert concentrations of interest will be evaluated and compared with a sequential sample size calculation. In the latter, the sample size is determined beforehand, but the experiments are planned in two (or more) sequences. After a pre-specified number of experiments, the length of the confidence interval for the parameter of interest is evaluated. If this length falls below some pre-defined boundary, further samples do not need to be considered and therefore less budget is required. In a second step, the focus will be on different sampling strategies. More precisely, we will investigate in which situations it is more appropriate to produce more replicates at a small number of different concentrations than to use fewer replicates at a large number of different concentrations. Here, we will also use the large data set from Schürmeyer et al. (2024, manuscript under revision) to analyse the different strategies. In particular, we will evaluate their performance from multiple perspectives (e.g. in terms of location and variation). Inspired by Herrmann et al. (2020), we will develop a one-dimensional score for the different evaluation measures, which also includes the budget aspect. Finally, we will use this score for defining optimal sample size strategies, see Herrmann et al. (2022).
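The interim check described above can be sketched as a simple stopping rule. This is a minimal illustration that assumes a normal-approximation (Wald) confidence interval around the estimate of the parameter of interest. The function names, the standard errors and the boundary of 0.5 are hypothetical.

```python
from statistics import NormalDist

def ci_length(std_error, level=0.95):
    """Length of a two-sided normal-approximation confidence interval."""
    z = NormalDist().inv_cdf(0.5 + level / 2.0)
    return 2.0 * z * std_error

def continue_to_next_stage(std_error, max_length, level=0.95):
    """True if the interim interval is still longer than the pre-defined boundary."""
    return ci_length(std_error, level) > max_length

# Hypothetical interim results for an alert concentration on the log scale
stop_early = not continue_to_next_stage(std_error=0.1, max_length=0.5)
need_more = continue_to_next_stage(std_error=0.2, max_length=0.5)
```

With these assumed numbers, a standard error of 0.1 already yields an interval shorter than the boundary, so the remaining budget would not be spent, while a standard error of 0.2 would trigger the next sequence.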

References

- Alhorn K, Dette H, Schorning K (2021). Optimal Designs for Model Averaging in non-nested Models. Sankhya A 83, 745-778. doi: 10.1007/s13171-020-00238-9

- Herrmann C, Pilz M, Kieser M, Rauch G (2020). A new conditional performance score for the evaluation of adaptive group sequential designs with sample size recalculation. Statistics in Medicine 39(15), 2067-2100. doi: 10.1002/sim.8534

- Herrmann C, Kieser M, Rauch G, Pilz M (2022). Optimization of adaptive designs with respect to a performance score. Biometrical Journal 64(6), 989-1006. doi: 10.1002/bimj.202100166

- Lanteri A, Leorato S, López-Fidalgo J, Tommasi C (2023). Designing to detect heteroscedasticity in a regression model. Journal of the Royal Statistical Society Series B: Statistical Methodology 85(2). doi: 10.1093/jrsssb/qkad004

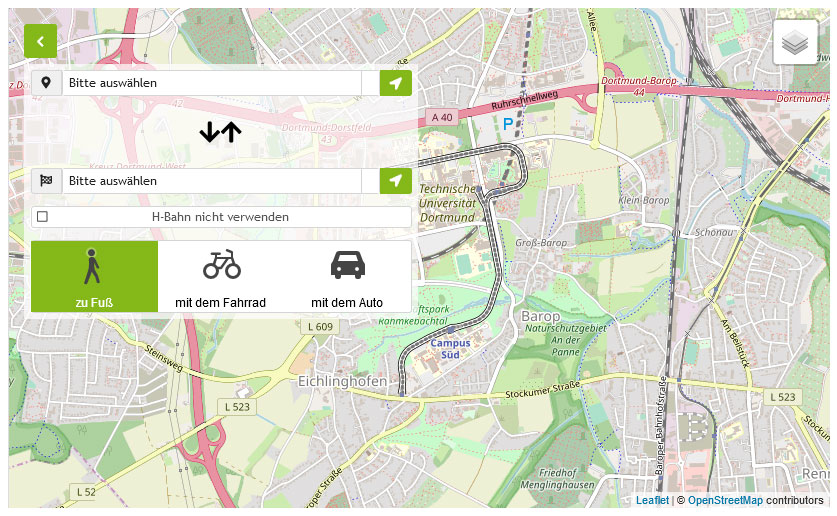

- Schürmeyer L, Hengstler JG, Schorning K (2024). Open Access Shiny App for the Determination of Designs for in vitro cytotoxicity experiments. Link to App: http://shiny.statistik.tu-dortmund.de:8080/app/occe